You’re reviewing a pull request when you hit a function that makes you pause. Something feels wrong, but you can’t articulate why. Your instinct says this code will be a maintenance nightmare, but the tests pass and the feature works.

These moments of unease often signal specific complexity patterns that make code harder to understand, modify, and debug. Learning to recognize these patterns systematically transforms code reviews from subjective discussions into objective quality assessments.

1. Deep Conditional Nesting

The Problem: When conditionals nest three or more levels deep, understanding all execution paths becomes overwhelming.

def process_payment(user, amount, payment_method):

if user.is_active:

if amount > 0:

if payment_method == "credit_card":

if user.credit_limit >= amount:

return charge_credit_card(user, amount)

else:

return "Payment declined: insufficient credit"

else:

return "Unsupported payment method"

else:

return "Invalid amount"

else:

return "User account inactive"

Why It’s Problematic: Each nesting level doubles execution paths, making thorough testing difficult.

Quick Fix: Use early returns to flatten structure:

def process_payment(user, amount, payment_method):

if not user.is_active:

return "User account inactive"

if amount <= 0:

return "Invalid amount"

if payment_method != "credit_card":

return "Unsupported payment method"

if user.credit_limit < amount:

return "Payment declined: insufficient credit"

return charge_credit_card(user, amount)

2. Oversized Functions

The Problem: Functions exceeding 50 lines or handling multiple responsibilities become difficult to understand and modify safely.

def generate_user_report(user_id):

user = database.get_user(user_id)

orders = database.get_user_orders(user_id)

# Calculate stats

total_spent = 0

category_stats = {}

for order in orders:

total_spent += order.amount

category_stats[order.category] = category_stats.get(order.category, 0) + 1

# Format and send report

report_html = f"<h1>Report for {user.name}</h1>"

report_html += f"<p>Total Spent: ${total_spent}</p>"

email_service.send(user.email, "Your Monthly Report", report_html)

return report_html

Why It’s Problematic: This function handles data fetching, calculation, formatting, and email sending. Changes to any concern require modifying the entire function.

Quick Fix: Extract functions for each responsibility:

def generate_user_report(user_id):

user_data = fetch_user_data(user_id)

stats = calculate_user_stats(user_data)

report_html = format_report(user_data.user, stats)

email_service.send(user_data.user.email, "Your Monthly Report", report_html)

return report_html

3. Duplicated Logic

The Problem: Similar code blocks with slight variations create maintenance burdens and inconsistent behavior.

def process_vip_order(order):

if order.amount > 1000:

order.apply_discount(0.15)

order.set_priority("HIGH")

order.set_processing_fee(0)

send_notification(order.customer_id, "VIP order processed")

def process_regular_order(order):

if order.amount > 500:

order.apply_discount(0.05)

order.set_priority("NORMAL")

order.set_processing_fee(2.99)

send_notification(order.customer_id, "Order processed")

Why It’s Problematic: Business logic changes require updates in multiple places, leading to potential inconsistencies.

Quick Fix: Extract common logic and parameterize differences:

def process_order(order, order_type):

apply_order_type_discount(order, order_type)

set_order_type_priority(order, order_type)

set_order_type_processing_fee(order, order_type)

send_notification(order.customer_id, order_type.get_notification_message())

4. Magic Numbers

The Problem: Hardcoded values scattered throughout code make business rules difficult to understand and modify consistently.

def calculate_shipping(weight, distance, is_express):

base_cost = weight * 0.5

if distance > 500:

base_cost *= 1.8

elif distance > 100:

base_cost *= 1.25

if is_express:

base_cost *= 2.0

return max(base_cost, 5.99)

Why It’s Problematic: Business rules are buried in magic numbers. Changing shipping policies requires hunting through code.

Quick Fix: Extract constants with descriptive names:

WEIGHT_RATE = 0.5

LONG_DISTANCE_THRESHOLD = 100

LONG_DISTANCE_MULTIPLIER = 1.25

VERY_LONG_DISTANCE_THRESHOLD = 500

VERY_LONG_DISTANCE_MULTIPLIER = 1.8

EXPRESS_MULTIPLIER = 2.0

MINIMUM_SHIPPING_COST = 5.99

def calculate_shipping(weight, distance, is_express):

base_cost = weight * WEIGHT_RATE

if distance > VERY_LONG_DISTANCE_THRESHOLD:

base_cost *= VERY_LONG_DISTANCE_MULTIPLIER

elif distance > LONG_DISTANCE_THRESHOLD:

base_cost *= LONG_DISTANCE_MULTIPLIER

if is_express:

base_cost *= EXPRESS_MULTIPLIER

return max(base_cost, MINIMUM_SHIPPING_COST)

5. Poor Error Handling

The Problem: Exception handling added as an afterthought, often catching overly broad exceptions or providing meaningless error information.

def process_user_data(json_data):

try:

user = json.loads(json_data)

database.save_user(user)

email_service.send_welcome_email(user['email'])

return "Success"

except Exception as ex:

return "Error occurred"

Why It’s Problematic: Different failure modes (invalid JSON, database errors, email failures) all return the same generic message, making debugging impossible.

Quick Fix: Handle specific exceptions and provide meaningful error information:

def process_user_data(json_data):

try:

user = json.loads(json_data)

database.save_user(user)

try:

email_service.send_welcome_email(user['email'])

except EmailException as ex:

logger.warning(f"Welcome email failed for user {user['id']}", ex)

return UserProcessingResult.success()

except json.JSONDecodeError as ex:

return UserProcessingResult.failure("Invalid user data format")

except DatabaseException as ex:

return UserProcessingResult.failure(f"Database error: {ex}")

6. Excessive Dependencies

The Problem: Functions calling many other functions or depending on numerous modules become difficult to test and understand.

def generate_invoice(order_id):

order = order_service.get_order(order_id)

customer = customer_service.get_customer(order.customer_id)

tax = tax_service.calculate_tax(order, customer.location)

shipping = shipping_service.calculate_shipping(order, customer.location)

template = template_service.get_invoice_template(customer.type)

pdf = pdf_service.generate_pdf(template, {

'order': order, 'customer': customer, 'tax': tax, 'shipping': shipping

})

storage_service.save_invoice(pdf, order_id)

notification_service.send_invoice(customer.email, pdf)

return pdf

Why It’s Problematic: This function depends on multiple services, making it fragile to changes and extremely difficult to test.

Quick Fix: Extract data gathering and delegate to specialized functions:

def generate_invoice(order_id):

invoice_data = gather_invoice_data(order_id)

pdf = create_invoice_pdf(invoice_data)

process_invoice_delivery(pdf, invoice_data.customer, order_id)

return pdf

7. Over-commented Logic

The Problem: When comments explain what code is doing (rather than why), it often indicates the code itself is too complex.

def calculate_loyalty_points(purchase_amount, customer_tier, is_birthday_month):

# Check if purchase meets minimum threshold

if purchase_amount > 10:

# Calculate base points as 1 point per dollar

base_points = int(purchase_amount)

# Apply tier multiplier: bronze = 1x, silver = 1.5x, gold = 2x

if customer_tier == "bronze":

multiplied_points = base_points * 1

elif customer_tier == "silver":

multiplied_points = base_points * 1.5

elif customer_tier == "gold":

multiplied_points = base_points * 2

# Add 25% birthday bonus if applicable

if is_birthday_month:

birthday_bonus = multiplied_points * 0.25

final_points = multiplied_points + birthday_bonus

else:

final_points = multiplied_points

return final_points

else:

return 0

Why It’s Problematic: Comments describe what each line does rather than explaining business logic, suggesting unclear code structure.

Quick Fix: Make code self-documenting through better structure and naming:

MINIMUM_PURCHASE_FOR_POINTS = 10

TIER_MULTIPLIERS = {"bronze": 1, "silver": 1.5, "gold": 2}

BIRTHDAY_BONUS_RATE = 0.25

def calculate_loyalty_points(purchase_amount, customer_tier, is_birthday_month):

if purchase_amount < MINIMUM_PURCHASE_FOR_POINTS:

return 0

base_points = int(purchase_amount)

tier_points = base_points * TIER_MULTIPLIERS[customer_tier]

birthday_bonus = tier_points * BIRTHDAY_BONUS_RATE if is_birthday_month else 0

return tier_points + birthday_bonus

Manual vs. Automated Detection

Experienced developers can spot many complexity patterns during reviews, but human recognition has limitations. Reviewers might miss issues in unfamiliar code areas or focus on style while overlooking structural problems.

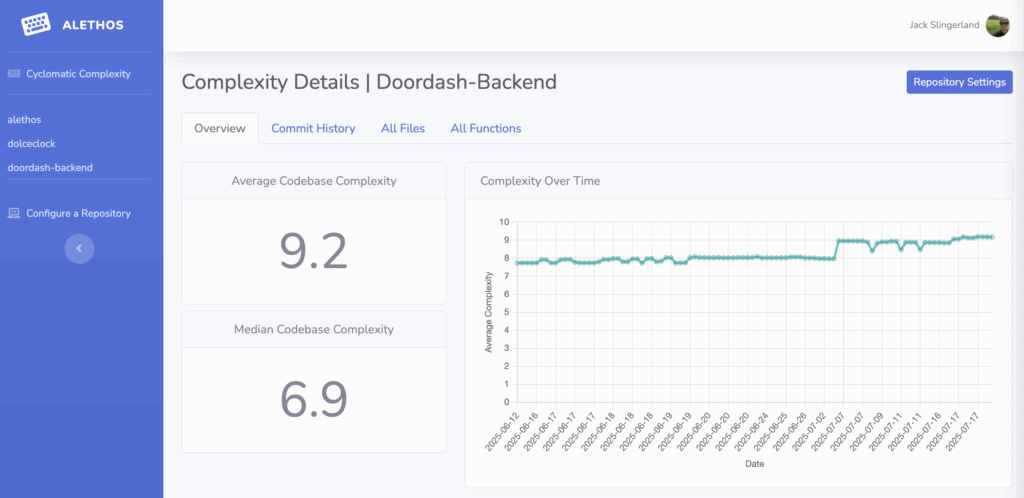

Modern complexity analysis tools like Alethos excel at systematically identifying patterns that reviewers miss. They provide objective measurements that supplement human judgment with data-driven insights, detecting not just individual patterns but combinations that create complexity hotspots.

Taking Action

When you spot these patterns, response depends on scope:

Immediate fixes work for magic numbers, simple nesting, and basic duplication. Address these within the current pull request.

Larger refactoring may be needed for oversized functions or extensive duplication. Consider follow-up tickets rather than blocking current changes.

Systematic patterns across multiple files indicate process issues requiring team discussion and coding standards updates.

Building Team Recognition

The goal isn’t perfect detection but consistent improvement. Teams that regularly discuss these patterns develop better instincts for spotting complexity before it accumulates.

Consider establishing complexity thresholds as part of your definition of done. When functions exceed certain metrics or exhibit multiple patterns, trigger additional review requirements.

Code complexity isn’t inevitable. It’s a series of small decisions that compound over time. Learning to recognize these seven patterns gives you the tools to make better decisions and catch complexity before it becomes technical debt.